iOS 2D Liveness Detection SDK (v2.1.2) User Guide

Development Preparation

- Contact us to register an account.

Overview

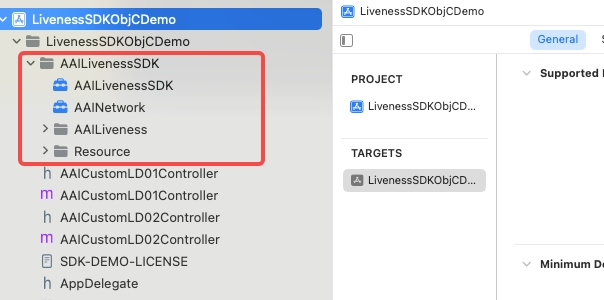

AAILivenessSDK contains four modules: the core module AAILivenessSDK.xcframework, the UI module AAILiveness, the resource module Resource, and the network module AAINetwork.xcframework.

The total size of the SDK is about 6.5 MB (arm64, bitcode disabled).

AAILivenessSDK.xcframework

This is the core module. Usually, only this module is updated when the SDK is updated; the external open-source code (AAILiveness, Resource) is not modified. This module is about 3.9 MB (arm64, bitcode disabled). See FAQ for how to strip the bitcode.

AAILiveness

This is the UI module. The SDK UI is implemented in this module, which is open source, so you can modify the code here if necessary. See Customizable UI and FAQ for how to customize the UI.

Resource

This resource module contains images, multi-language files, audio files, and model files. The UI module AAILiveness depends on this resource module: during liveness detection, it loads the related images and plays audio. Note that the core module AAILivenessSDK.xcframework depends only on AAIModel.bundle. This resource folder is about 2 MB.

AAINetwork

A base network library that AAI SDKs rely on. This module is about 0.6 MB (arm64, bitcode disabled).

SDK requirements and limitations are as follows:

- Minimum iOS version: iOS 9.0
- Additional dependent third-party libraries: None
- Supported CPU architectures: arm64, x86_64
- SDK package size: 6.5 MB (arm64, bitcode disabled)
- Supported bitcode: NO
- Supported languages: en, zh-Hans, id, vi, th, es, ms, hi
- Usage permissions: NSCameraUsageDescription, NSMotionUsageDescription

Compliance Explanation

See Compliance Explanation for more details.

Migration Guides

When migrating from an older SDK to 2.0.1, follow this document to reintegrate the SDK.

When migrating from 1.2.9 to 1.3.0, you need to add the system library Accelerate.framework and replace all of the SDK files.

When migrating from 1.2.8 to 1.2.9, you need to replace all of the SDK files ("AAILiveness" folder, "AAILivenessSDK.xcframework" folder, "Resource" folder).

When migrating from 1.2.7 (or lower) to 1.2.8 (or higher), you need to add the system library libz.tbd and replace all of the SDK files.

From 1.2.3 or higher, AAILivenessViewController provides some public interfaces and properties. When upgrading to this version, you should use its public interfaces to implement your custom logic.

If your SDK version is lower than 1.2.0, then after migrating to 1.2.0 or higher, you need to:

- Change the parameters of the onDetectionComplete method from NSDictionary to AAILivenessResult.
- Add the AAIModel.bundle resource.
- Call the [AAILivenessSDK configModelBundlePath:] method to configure the path to the AAIModel.bundle file. You can refer to the viewDidLoad method of AAILivenessViewController.m for more details.

We recommend you read the change logs to see what has been updated in the new version of the SDK.

Run demo project

The demo project is included in the SDK compressed package. Download the AAILivenessSDK package and extract it, then navigate to the directory of the AAILivenessSDKObjCDemo or AAILivenessSDKSwiftDemo project and install the dependencies:

```shell
pod install
```

Open the .xcworkspace file in Xcode.

Specify your market:

```objc
[AAILivenessSDK initWithMarket:AAILivenessMarket<yourMarket>];
```

Specify the license content and start the SDK. The license content is obtained by your server calling our OpenAPI.

Installation

CocoaPods

Specify the SDK names and URLs in the Podfile:

```ruby
pod 'AAINetwork', :http => 'https://prod-guardian-cv.oss-ap-southeast-5.aliyuncs.com/sdk/iOS-libraries/AAINetwork/AAINetwork-V1.0.2.tar.bz2', type: :tbz
pod 'AAILiveness', :http => 'https://prod-guardian-cv.oss-ap-southeast-5.aliyuncs.com/sdk/iOS-liveness-detection/2.1.2/iOS-Liveness-SDK-V2.1.2.tar.bz2', type: :tbz
```

Install the dependencies in your project:

```shell
pod install
```

Add the camera usage description to Info.plist as below. Skip this step if you have already added it.

```xml
<key>NSCameraUsageDescription</key>
<string>Use the camera to detect the face movements</string>
```

Refer to the demo project to see how to use the SDK.

Manually

Download AAILivenessSDK and AAINetwork, uncompress them, and add the AAILivenessSDK folder and AAINetwork.xcframework to your project.

Choose "TARGETS -> General" and add the following system libraries and frameworks in the Frameworks, Libraries, and Embedded Content section:

- libz.tbd
- libc++.tbd
- AVFoundation.framework
- CoreMotion.framework
- SystemConfiguration.framework
- CoreTelephony.framework
- Accelerate.framework
- Metal.framework
- MetalKit.framework

Choose "TARGETS -> General -> Frameworks, Libraries, and Embedded Content", then set the Embed option of AAILivenessSDK.xcframework and AAINetwork.xcframework to "Embed & Sign".

Add the camera and motion sensor (gyroscope) usage descriptions to Info.plist as below. Skip this step if you have already added them.

```xml
<key>NSCameraUsageDescription</key>
<string>Use the camera to detect the face movements</string>
```

Usage

Import the SDK and initialize it with your market:

```objc
// For pod integration
@import AAILiveness;

/*
// For manual integration
#import <AAILivenessSDK/AAILivenessSDK.h>
#import "AAILivenessViewController.h"
#import "AAILivenessFailedResult.h"
*/

/*
If you need the SDK as a global service, use the initialization method
`initWithMarket:isGlobalService:` and pass YES to the `isGlobalService` parameter.
*/
[AAILivenessSDK initWithMarket:AAILivenessMarket<yourMarket>];

// Configure SDK

/*
// Set the size (width) of `img` in `AAILivenessResult`. The image size (width) should be
// in the range [300, 1000]; the default image size (width) is 600 (600x600).
[AAILivenessSDK configResultPictureSize:600];
*/

/*
// Set whether to detect face occlusion. The default value is NO.
[AAILivenessSDK configDetectOcclusion:YES];
*/

/*
AAIAdditionalConfig *additionalConfig = [AAILivenessSDK additionalConfig];

// Set the color of the round border in the avatar preview area. Default is clear color.
// additionalConfig.roundBorderColor = [UIColor colorWithRed:0.36 green:0.768 blue:0.078 alpha:1.0];

// Set the color of the ellipse dashed line that appears during liveness detection. Default is white.
// additionalConfig.ellipseLineColor = [UIColor greenColor];

// Set the difficulty level of liveness detection. Default is AAIDetectionLevelNormal.
// Available levels are AAIDetectionLevelEasy, AAIDetectionLevelNormal and AAIDetectionLevelHard.
// The harder the level, the stricter the verification.
// Note that this must be set before "configLicenseAndCheck:", otherwise it won't take effect.
// additionalConfig.detectionLevel = AAIDetectionLevelEasy;
*/
```

Configure the SDK license and show the SDK page:

```objc
// The license content is obtained by your server calling our OpenAPI.
NSString *checkResult = [AAILivenessSDK configLicenseAndCheck:@"your-license-content"];

if ([checkResult isEqualToString:@"SUCCESS"]) {
    // The license is valid; show the SDK page.
    AAILivenessViewController *vc = [[AAILivenessViewController alloc] init];

    /*
    // Custom motion sequences.
    // The default `detectionActions` array contains 2 `detectionType` values in random order.
    // You can also specify the motion detection sequence yourself,
    // as the sequence and number are not fixed.
    vc.detectionActions = @[@(AAIDetectionTypeBlink), @(AAIDetectionTypeMouth), @(AAIDetectionTypePosYaw)];
    */

    /*
    // Set the action detection timeout. Default is 10s.
    // Note that this value is the timeout for a single action,
    // not the total timeout for all actions.
    vc.actionTimeoutInterval = 10;
    */

    /*
    // Specify which language the SDK uses.
    // The languages currently supported by the SDK are:
    // "en" "id" "vi" "zh-Hans" "th" "es" "ms" "hi"
    vc.language = @"id";
    */

    /*
    // Set the timeout for the prepare stage. Default is 50s.
    //
    // This value is the time from when the SDK page is displayed to when motion detection is ready.
    // For example, if after the SDK page is presented the user does not hold the phone upright
    // or put the face in the detection area, and stays in this state for this period of time,
    // then `detectionFailedBlk` will be called, and the value of "key" in errorInfo
    // is "fail_reason_prepare_timeout".
    vc.prepareTimeoutInterval = 50;
    */

    /*
    // Whether to play prompt audio. Default is YES.
    vc.playAudio = NO;
    */

    /*
    // Whether to display animation images. Default is YES. If set to NO, animation images are hidden.
    vc.showAnimationImg = NO;
    */

    /*
    // Whether to record tapping the back button as "user_give_up". Default is NO.
    // If set to YES, `detectionFailedBlk` will be called when the user taps
    // the top-left back button while liveness detection is running.
    vc.recordUserGiveUp = YES;
    */

    vc.detectionSuccessBlk = ^(AAILivenessViewController * _Nonnull rawVC, AAILivenessResult * _Nonnull result) {
        // Get livenessId
        NSString *livenessId = result.livenessId;

        // The best-quality face image captured by the SDK. Note that the default image size is 600x600.
        UIImage *bestImg = result.img;
        CGSize size = bestImg.size;
        NSLog(@">>>>>livenessId: %@, imgSize: %.2f, %.2f", livenessId, size.width, size.height);

        // A small square image containing only the face area; the image size is 300x300.
        UIImage *fullFaceImg = result.fullFaceImg;

        // Do something... (e.g., call the anti-spoofing API to get a score)

        [rawVC.navigationController popViewControllerAnimated:YES];
    };

    /*
    // If you don't implement `detectionFailedBlk`, the SDK goes to `AAILivenessResultViewController`
    // when liveness detection fails. You can implement this block to go to your own result page
    // or to process the error information.
    vc.detectionFailedBlk = ^(AAILivenessViewController * _Nonnull rawVC, NSDictionary * _Nonnull errorInfo) {
        AAILivenessFailedResult *result = [AAILivenessFailedResult resultWithErrorInfo:errorInfo];
        NSLog(@"Detection failed: %@, message: %@, transactionId: %@", result.errorCode, result.errorMsg, result.transactionId);

        // Close the SDK page ...
        [rawVC.navigationController popViewControllerAnimated:YES];
    };
    */

    // Demo: Push the SDK page.
    [self.navigationController pushViewController:vc animated:YES];

    /*
    // Demo: Present the SDK page.
    UINavigationController *navc = [[UINavigationController alloc] initWithRootViewController:vc];
    navc.navigationBarHidden = YES;
    navc.modalPresentationStyle = UIModalPresentationFullScreen;
    [self presentViewController:navc animated:YES completion:nil];
    */
} else if ([checkResult isEqualToString:@"LICENSE_EXPIRE"]) {
    // "LICENSE_EXPIRE"
} else if ([checkResult isEqualToString:@"APPLICATION_ID_NOT_MATCH"]) {
    // "APPLICATION_ID_NOT_MATCH"
} else {
    // Other unknown errors ...
}
```
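The configuration snippet above says that the width passed to configResultPictureSize: should be in the range [300, 1000], with 600 as the default. The guide does not say how the SDK treats out-of-range values. If the width comes from a dynamic source, such as a remote setting, you may want to clamp it before calling the SDK.

The following is a minimal sketch in plain C, which also compiles unchanged in an Objective-C .m file. clamp_result_picture_width and the AAI_RESULT_WIDTH_* constants are hypothetical names and are not part of the SDK.

```c
/* Hypothetical helper, not part of AAILivenessSDK.
 * Clamps a requested result-image width to the range documented for
 * configResultPictureSize: ([300, 1000]). Non-positive input falls back to
 * the documented default of 600. */
#define AAI_RESULT_WIDTH_MIN 300
#define AAI_RESULT_WIDTH_MAX 1000
#define AAI_RESULT_WIDTH_DEFAULT 600

static int clamp_result_picture_width(int requested)
{
    if (requested <= 0) {
        /* No usable value: use the documented default width. */
        return AAI_RESULT_WIDTH_DEFAULT;
    }
    if (requested < AAI_RESULT_WIDTH_MIN) {
        return AAI_RESULT_WIDTH_MIN;
    }
    if (requested > AAI_RESULT_WIDTH_MAX) {
        return AAI_RESULT_WIDTH_MAX;
    }
    return requested;
}
```

You could then call [AAILivenessSDK configResultPictureSize:clamp_result_picture_width(width)], where width is your own value. This sketch falls back to the default for non-positive input rather than clamping it to 300; that is a design choice, and the SDK does not require it.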

Error code

The errorCode values of AAILivenessFailedResult are as follows:

| errorCode | Raw native SDK code | Description |
|---|---|---|
| PREPARE_TIMEOUT | fail_reason_prepare_timeout | Timeout during the preparation stage |
| ACTION_TIMEOUT | fail_reason_timeout | Timeout during the motion stage |
| MUTI_FACE | fail_reason_muti_face | Multiple faces detected during the motion stage |
| FACE_MISSING | fail_reason_facemiss_blink_mouth | Face missing during the motion stage (blink or open mouth) |
| FACE_MISSING | fail_reason_facemiss_pos_yaw | Face missing during the motion stage (pos yaw) |
| MUCH_ACTION | fail_reason_much_action | Multiple motions detected during the motion stage |
| USER_GIVE_UP | user_give_up | The user tapped the top-left back button during the detection process |
| NO_RESPONSE | | Network request failed |
| UNDEFINED | | Other undefined errors |
| ... | | Other server-side error codes |
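AAILivenessFailedResult already exposes errorCode, so you normally do not need to translate anything yourself. However, you may work with the raw errorInfo dictionary instead. The usage code above notes that its "key" entry holds a raw code such as fail_reason_prepare_timeout. In that case, a lookup table built from the rows above is one way to get the corresponding errorCode.

The following is a minimal sketch in plain C, which is also valid in an Objective-C .m file. The type, table, and function names are hypothetical. Returning UNDEFINED for any other code is an assumption of this sketch, not documented SDK behavior.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical types and helper, not part of AAILivenessSDK.
 * Maps a raw native SDK code (the "key" value in errorInfo) to the
 * errorCode values listed in the error code table. */
typedef struct {
    const char *raw_code;
    const char *error_code;
} AAIErrorCodeMapping;

static const AAIErrorCodeMapping kAAIErrorCodeMappings[] = {
    {"fail_reason_prepare_timeout",      "PREPARE_TIMEOUT"},
    {"fail_reason_timeout",              "ACTION_TIMEOUT"},
    {"fail_reason_muti_face",            "MUTI_FACE"},
    {"fail_reason_facemiss_blink_mouth", "FACE_MISSING"},
    {"fail_reason_facemiss_pos_yaw",     "FACE_MISSING"},
    {"fail_reason_much_action",          "MUCH_ACTION"},
    {"user_give_up",                     "USER_GIVE_UP"},
};

/* Returns the errorCode for raw_code, or "UNDEFINED" when raw_code is
 * NULL or not in the table (assumed fallback). */
static const char *aai_error_code_for_raw_code(const char *raw_code)
{
    if (raw_code == NULL) {
        return "UNDEFINED";
    }
    size_t count = sizeof(kAAIErrorCodeMappings) / sizeof(kAAIErrorCodeMappings[0]);
    for (size_t i = 0; i < count; i++) {
        if (strcmp(kAAIErrorCodeMappings[i].raw_code, raw_code) == 0) {
            return kAAIErrorCodeMappings[i].error_code;
        }
    }
    return "UNDEFINED";
}
```

From Objective-C you could call it as aai_error_code_for_raw_code([errorInfo[@"key"] UTF8String]), assuming the "key" value is an NSString.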

Customizable UI

Action Detection Sequence Customization.

The default detectionActions array contains 2 detectionType values, and the order of the motions is random. You can also specify the motion detection sequence yourself, as the sequence and number are not fixed. For example:

```objc
viewController.detectionActions = @[@(AAIDetectionTypeMouth), @(AAIDetectionTypeBlink), @(AAIDetectionTypePosYaw)];
// or
viewController.detectionActions = @[@(AAIDetectionTypeBlink)];
// or
viewController.detectionActions = @[@(AAIDetectionTypeBlink), @(AAIDetectionTypePosYaw)];
```

UI Customization.

| File name | File description | Customization |
|---|---|---|
| AAILivenessViewController.m | Detection page | You can implement custom UI and other logic by subclassing this class. See the demo project for how to customize the UI. |
| AAILivenessResultViewController.m | Detection result page | All UI elements can be customized. If necessary, you can implement the detectionSuccessBlk and detectionFailedBlk of AAILivenessViewController.h to go to your own app page instead of this result page. |
| AAIHUD.m | Activity indicator view | All UI elements can be customized. If necessary, you can implement the beginRequestBlk and endRequestBlk of AAILivenessViewController.h to show your own activity indicator view. |

For more information on how to customize the UI, refer to the related question in the FAQ.

Resources File Customization.

Currently, this SDK supports English (en), Simplified Chinese (zh-Hans), Indonesian (id), Vietnamese (vi), Thai (th), Mexican Spanish (es), Bahasa Melayu (ms) and Hindi (hi). The SDK uses the resource files that match the device's current language. If the device language is not one of these, the SDK uses English by default. If you want to support only a fixed language, set the language property of AAILivenessViewController. The resource files for these languages are in the following bundles:

| Name | Description | Customization |
|---|---|---|
| AAILanguageString.bundle | Multi-language bundle | Can be modified directly |
| AAIAudio.bundle | Audio bundle | Replace it with your own audio files. NOTE: the audio file names cannot be changed |
| AAIImgs.bundle | Image bundle | Replace it with your own image files. NOTE: the image file names cannot be changed |
| AAIModel.bundle | Model bundle | Cannot be modified |
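The language fallback described above can be sketched as a small lookup. This may help if you choose the value of the language property yourself, for example to follow an in-app language setting rather than the device language. The SDK's exact matching rule is not documented here.

This sketch assumes that a tag matches a supported code when it equals the code or begins with the code followed by "-". Under that rule, "id-ID" maps to "id" and "zh-Hans-CN" maps to "zh-Hans". aai_pick_sdk_language is a hypothetical name, written in plain C that also compiles in an Objective-C .m file.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical helper, not part of AAILivenessSDK.
 * Picks one of the SDK's supported language codes for a tag such as
 * "id-ID" or "zh-Hans-CN", falling back to "en" as the SDK does.
 * Assumed rule: a tag matches a supported code if it equals the code or
 * starts with the code followed by '-'. */
static const char *const kAAISupportedLanguages[] = {
    "en", "zh-Hans", "id", "vi", "th", "es", "ms", "hi"
};

static const char *aai_pick_sdk_language(const char *preferred)
{
    if (preferred == NULL) {
        return "en";
    }
    size_t count = sizeof(kAAISupportedLanguages) / sizeof(kAAISupportedLanguages[0]);
    for (size_t i = 0; i < count; i++) {
        const char *code = kAAISupportedLanguages[i];
        size_t len = strlen(code);
        /* Exact match ("id") or the code followed by a subtag ("id-ID"). */
        if (strncmp(preferred, code, len) == 0 &&
            (preferred[len] == '\0' || preferred[len] == '-')) {
            return code;
        }
    }
    /* Not a supported language: use English, matching the documented default. */
    return "en";
}
```

For example, you could write vc.language = @(aai_pick_sdk_language(appLanguage.UTF8String)); where appLanguage is your own NSString. Under this rule, a Traditional Chinese tag such as "zh-Hant-TW" falls back to English, because only zh-Hans is supported.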

FAQ

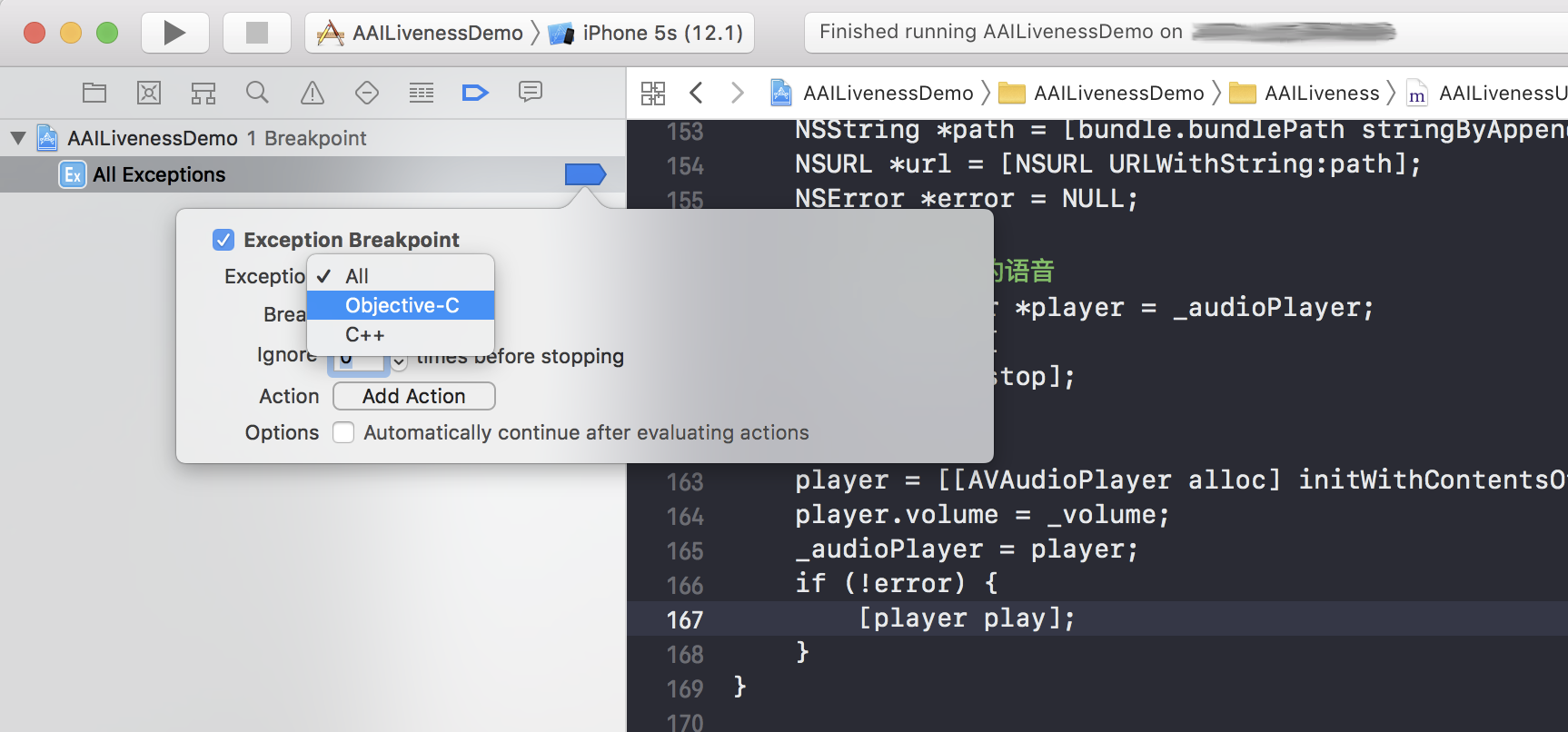

If you add an All Exceptions breakpoint, the code may stop at player = [[AVAudioPlayer alloc] initWithContentsOfURL:url error:&error]; (line 163 of AAILivenessUtil.m) during face detection actions. The solution is to find the All Exceptions breakpoint and change its exception type from "All" to "Objective-C", as shown in the figure above.

Can I customize the UI?

Yes. From 1.2.3 or higher, AAILivenessViewController provides some public interfaces and properties; see the demo project for how to customize the UI or logic. For 1.2.2 or lower, AAILivenessViewController does not provide an extensible UI, so you need to modify the source code to meet your requirements. In addition, AAILivenessResultViewController is just a page that displays the result. Normally, you can implement the detectionSuccessBlk and detectionFailedBlk of the AAILivenessViewController class to jump to your own app page instead of the AAILivenessResultViewController page.

If I have modified the UI part of the code, how do I upgrade to the new version of the SDK?

Usually, only AAILivenessSDK.xcframework is updated when the SDK is updated, and the external open-source code is not modified. If the external open-source code is modified in a new version of the SDK, the release log will contain detailed instructions. Read the release log carefully to check whether the external code has been modified.

Error: Access for this account is denied

You need to bind your app bundleId to the accessKey and secretKey on our CMS website (Personal Management -> ApplicationId Management).